Experience Optimization and content testing FAQ

Frequently asked questions about running content tests.

Here are some frequently asked questions about using Experience Optimization and content testing to create and run content tests.

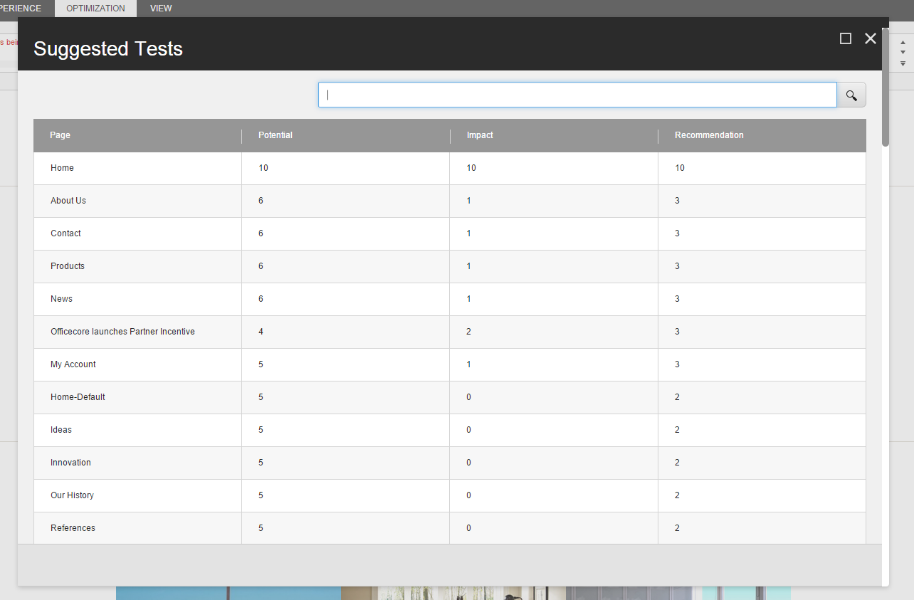

The Suggested Tests dialog shows you the pages that you should test to gain the most potential value.

The following metrics, each a normalized score between 1 and 10, indicate whether a page is worth testing:

Potential – a score that indicates how much potential the page has for increasing the engagement of the users who visit it

Impact – the expected level of impact of the test

Recommendation – a combination of potential and impact. This shows you how strong the recommendation to optimize the page is.


Sitecore calculates the number of visitors needed to validate a test. This number depends on the number of experiences that you are testing and the anticipated confidence level of the test.

When starting a test, you can see the number of visits needed for a statistically significant result. If there is historical visitor data available for a page, you see the expected number of days that the test should run before it reaches a valid result.

Historical data for the last month lets you predict the needed number of visits to achieve a significant result for the test. Website traffic, however, can vary over time, and this can have an effect on the forecast's accuracy.
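The relationship between the number of experiences, the confidence level, and the forecast duration can be illustrated with a minimal sketch. It is not Sitecore's actual calculation, which this FAQ does not describe. It assumes a textbook normal-approximation sample size for comparing two rates, and the names `visits_needed` and `expected_days`, as well as the `power`, `baseline_rate`, and `relative_lift` parameters, are hypothetical.

```python
import math
from statistics import NormalDist


def visits_needed(num_experiences, confidence=0.95, power=0.80,
                  baseline_rate=0.10, relative_lift=0.20):
    """Rough total visits for a test (hypothetical helper, not a Sitecore API).

    Uses the standard two-proportion sample-size approximation per
    experience and multiplies it by the number of experiences.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # Two-sided critical value for the chosen confidence level.
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Critical value for the assumed statistical power.
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    per_experience = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(per_experience) * num_experiences


def expected_days(total_visits_needed, visits_last_month, days_in_month=30):
    """Forecast the test duration from last month's traffic (assumed steady)."""
    daily_visits = visits_last_month / days_in_month
    if daily_visits <= 0:
        raise ValueError("no historical traffic available for a forecast")
    return math.ceil(total_visits_needed / daily_visits)


total = visits_needed(num_experiences=3, confidence=0.95)
days = expected_days(total, visits_last_month=30000)
```

In this sketch, adding experiences or raising the confidence level increases the number of visits needed, and the forecast in days is only as reliable as the assumption that traffic stays at last month's level.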

The method validates that one of the experiences has performed significantly better than the original and declares a winner when the needed number of visitors has been reached.

Yes, the test will still run. You should allow tests to run until the suggested end date. This ensures that you have data for each day of the week, which can affect the test results and provide insight into activity on your websites.

If the test's end date passes without a statistically significant result, the test is suspended.

Experience Optimization forecasts how long the test should run, but you may want to adjust the time frame of the test in the configuration file. For example, you might want to do this if you are making significant changes to your website that will affect traffic to the pages being tested. A new campaign, for instance, could increase traffic to a page and influence the result of your test.

In general, it is best practice to specify a maximum duration for a test because some search engines interpret indefinitely tested content as an attempt to negatively influence search results.

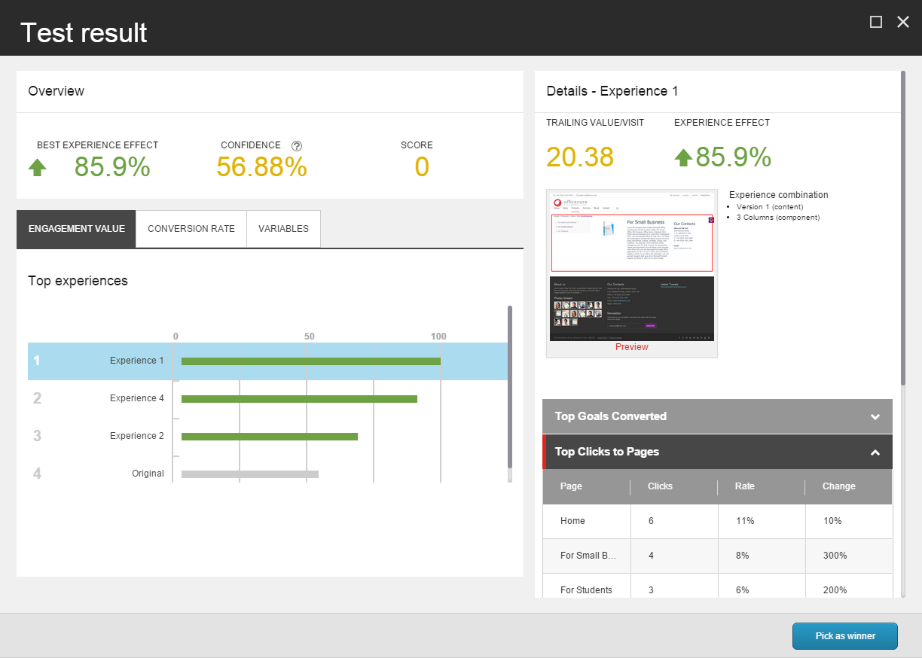

The Test results overview provides information about how your tests are performing. There are three metrics that give you insight into a test's performance. They are:

Experience effect – the relative change in engagement value since the test began

Confidence – the statistical confidence level

Score – the product of the effect and the number of visits in a month


In this example, the test has an 85.9% experience effect. This means that the engagement value generated by the content has increased by 85.9% over the original. The confidence level is 56.88%, indicating that the result is not yet statistically significant and the test is probably unfinished.


Effect shows how much the engagement value of the contacts exposed to the tested content has changed. If the original version of the page has the highest engagement value, the effect score is 0, as the test has had no effect.

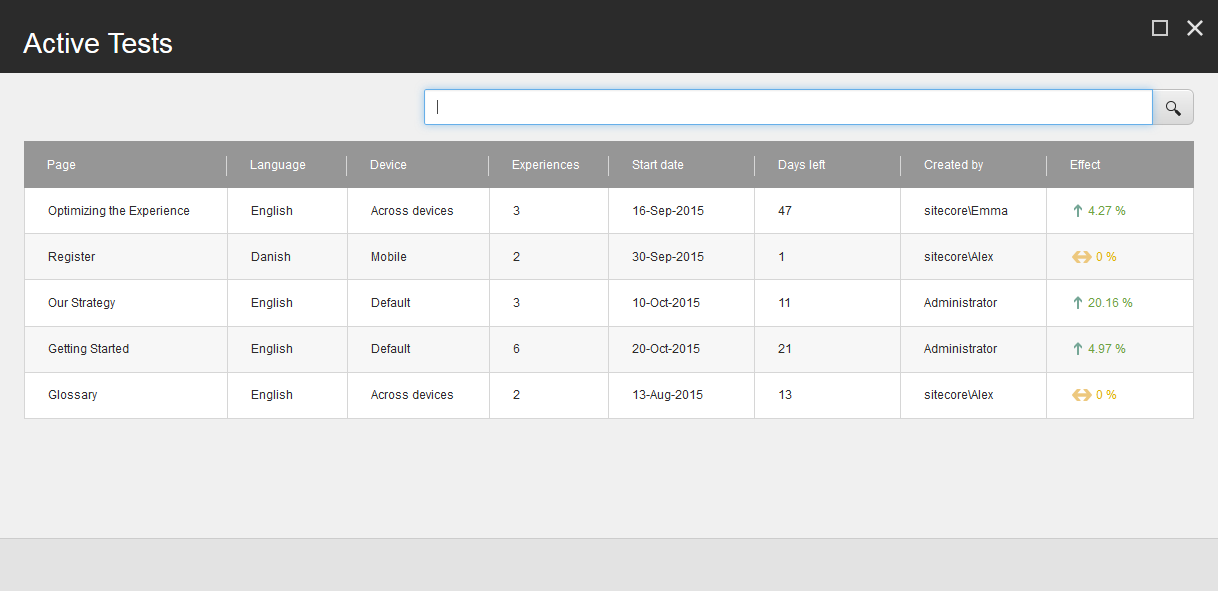

Yes. You can create device-specific A/B and multivariate tests. Content tests, however, run across all of the relevant devices.

Yes. You can start a component or personalization test without a workflow. You may want to do this if your organization does not use workflows to approve content or tests for your website.

Yes. There are several security roles that provide access to different levels of functionality in Experience Optimization.

Authoring – enables the creation, running, and editing of tests.

You typically assign this role to content authors and marketers.

Analytics Advanced Testing – contains the same access as the Authoring role, plus additional tabs and controls.

You typically assign this role to marketing analysts.

Analytics Management Reporting – has full access to all content testing dashboards and historical reports but cannot create tests.

You typically assign this role to marketing directors.

An administrator can also add security roles to individual users to give them access to Experience Optimization.